How’s the man?

2021/03/01

This is another post about the Raspberry Pi, and today I want to show how I built a simple Kafka cluster demo using the Sense HAT and the GFX HAT.

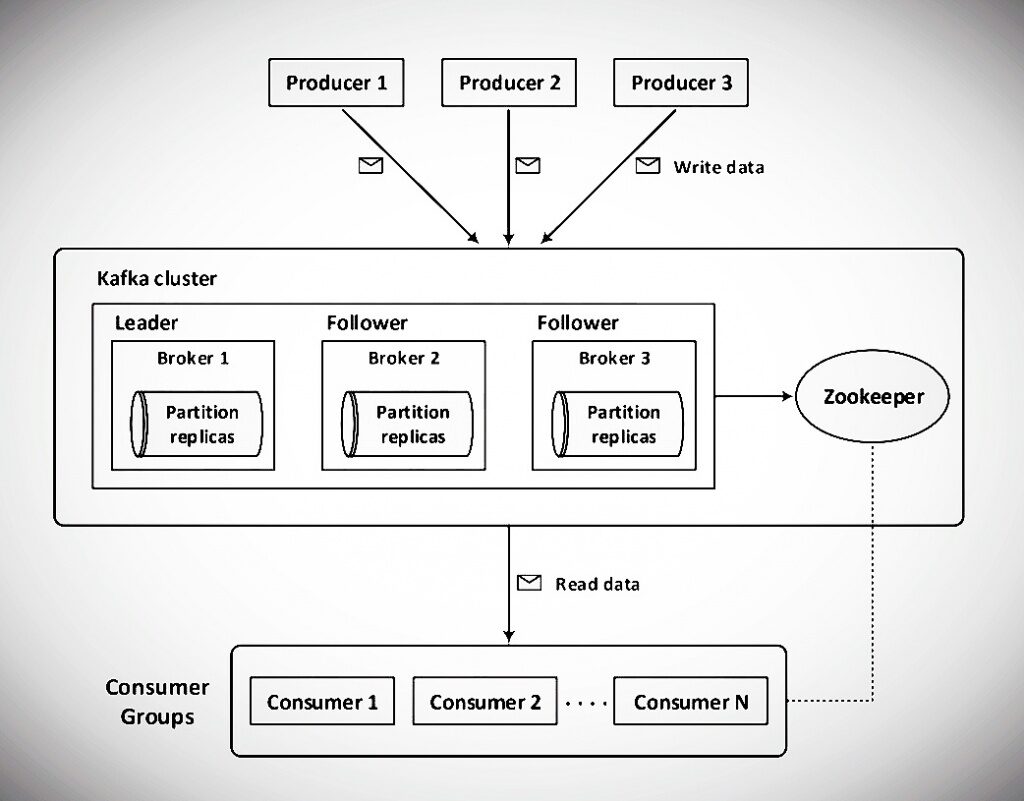

If you're not familiar with Kafka, I suggest reading my previous post What is Kafka? first. You can also see how I created the Kafka cluster here.

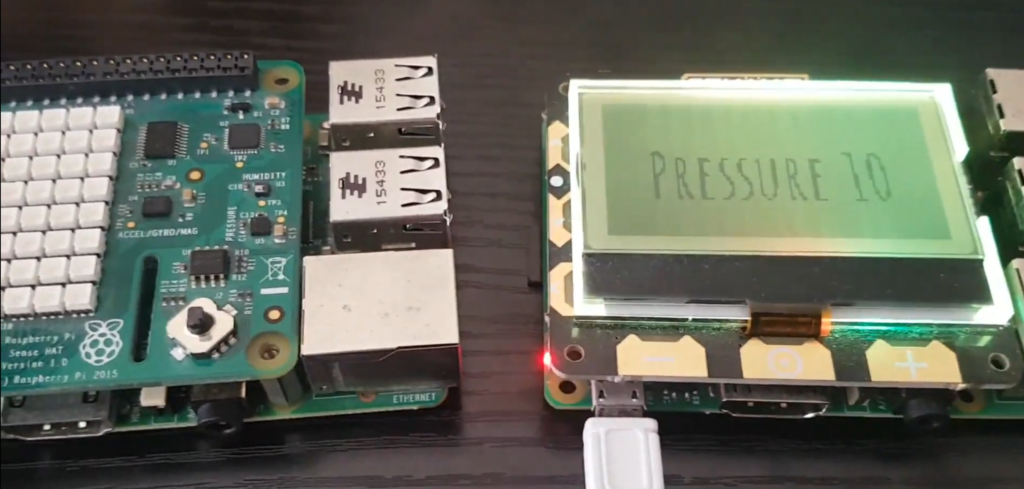

The Sense HAT is an add-on board for the Raspberry Pi that has an 8×8 RGB LED matrix and a five-button joystick, and includes the following sensors: gyroscope, accelerometer, magnetometer, barometer, temperature sensor, and relative humidity sensor.

You can learn more about the Sense HAT in my previous blog.

The GFX HAT is an add-on board for the Raspberry Pi that has a 128×64 pixel, 2.15″ LCD display with a snazzy six-zone RGB backlight and six capacitive touch buttons. The GFX HAT makes an ideal display and interface for your headless Pi projects.

You can learn more about the GFX HAT here and check the API here.

The idea here is to focus on scenarios where the Kafka clients and the Kafka brokers are running on the edge. This enables edge processing, integration, decoupling, low latency, and cost-efficient data processing.

Edge Kafka is not simply yet another IoT project using Kafka in a remote location. Edge Kafka is an essential component of a streaming nervous system that spans IoT (or OT in Industrial IoT) and non-IoT (traditional data-center / cloud infrastructures).

Multi-cluster and cross-data center deployments of Apache Kafka have become the norm rather than an exception. A Kafka deployment at the edge can be an independent project. However, in most cases, Kafka at the edge is part of an overall Kafka architecture.

Apache Kafka is the New Black at the Edge in Industrial IoT, Logistics, and Retailing

Idea

A two-node Raspberry Pi Kafka cluster, with a Micronaut Kafka producer that reads the Sense HAT data and a Quarkus Kafka consumer that exposes the result through a REST endpoint, which the GFX HAT reads using the Python API.

The Micronaut producer gets the Sense HAT humidity, pressure, and temperature values and sends them to a Kafka topic.
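The real producer is the Micronaut application linked below. Purely to illustrate the flow of reading three values and sending them to a topic, here is a minimal Python sketch, not the code from the repository. The topic name `sensehat-readings`, the JSON field names, and the broker address are assumptions made for the example, and `run_on_pi` assumes the `sense-hat` and `kafka-python` packages are installed.

```python
import json
import time

TOPIC = "sensehat-readings"  # hypothetical topic name, not taken from the post


def build_payload(humidity, pressure, temperature, timestamp):
    """Serialize one reading as UTF-8 JSON (the field names are an assumption)."""
    return json.dumps({
        "humidity": round(humidity, 2),
        "pressure": round(pressure, 2),
        "temperature": round(temperature, 2),
        "timestamp": timestamp,
    }).encode("utf-8")


def publish_once(read, send, now=time.time):
    """Read the sensors once and hand the payload to `send(topic, payload)`."""
    reading = read()
    payload = build_payload(
        reading["humidity"], reading["pressure"], reading["temperature"], now()
    )
    send(TOPIC, payload)
    return payload


def run_on_pi(bootstrap_servers="localhost:9092", interval_s=5.0):
    """Wire the loop to real hardware; only runs on a Pi with a Sense HAT."""
    from sense_hat import SenseHat      # assumed installed: sense-hat
    from kafka import KafkaProducer     # assumed installed: kafka-python

    sense = SenseHat()
    producer = KafkaProducer(bootstrap_servers=bootstrap_servers)

    def read():
        return {
            "humidity": sense.get_humidity(),
            "pressure": sense.get_pressure(),
            "temperature": sense.get_temperature(),
        }

    while True:
        publish_once(read, producer.send)
        producer.flush()
        time.sleep(interval_s)
```

Because the sensor read and the Kafka send are passed in as plain functions, `publish_once` can be tried on a laptop with fake values before it is pointed at the cluster with `run_on_pi()`.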

The Quarkus consumer reads the Kafka topic and exposes the latest value through a REST interface. I used the GFX HAT to display the result.
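The real consumer is the Quarkus application linked below. The "keep the last value and serve it over REST" idea can be sketched in a few lines of Python using only the standard library. This is an illustration under assumptions, not the repository code: the `/last` path, the port, and the topic name `sensehat-readings` are made up for the example, and `consume_forever` assumes the `kafka-python` package.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class LastValue:
    """Thread-safe holder for the most recent message body."""

    def __init__(self):
        self._lock = threading.Lock()
        self._value = None

    def set(self, value):
        with self._lock:
            self._value = value

    def get(self):
        with self._lock:
            return self._value


def make_handler(store):
    """Build a request handler that serves the stored value on GET /last."""

    class Handler(BaseHTTPRequestHandler):
        def do_GET(self):
            body = store.get()
            if self.path != "/last" or body is None:
                # Unknown path, or nothing consumed yet.
                self.send_response(404)
                self.end_headers()
                return
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, *args):
            pass  # keep the console quiet

    return Handler


def start_server(store, host="127.0.0.1", port=8080):
    """Serve the store in a background thread and return the server object."""
    server = ThreadingHTTPServer((host, port), make_handler(store))
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def consume_forever(store, topic="sensehat-readings",
                    bootstrap_servers="localhost:9092"):
    """Copy every Kafka message into the store (needs a running broker)."""
    from kafka import KafkaConsumer  # assumed installed: kafka-python

    for message in KafkaConsumer(topic, bootstrap_servers=bootstrap_servers):
        store.set(message.value)
```

With something like this running, the script driving the GFX HAT only has to poll `GET /last` and draw whatever JSON comes back; it never talks to Kafka directly.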

You can get the full Micronaut Kafka Producer code on my GitHub.

You can get the full Quarkus Kafka Consumer code on my GitHub.

Results

A two-node Raspberry Pi Kafka cluster.

The Micronaut Kafka producer gets the Sense HAT data and the Quarkus Kafka consumer puts the result in a REST endpoint that the GFX HAT reads using the Python API. pic.twitter.com/0zuohbaELd (Igor De Souza, @Igfasouza, August 12, 2020)

Kafka is a great solution for the edge. It enables deploying the same open, scalable, and reliable technology at the edge, data center, and the cloud. This is relevant across industries. Kafka is used in more and more places where nobody has seen it before. Edge sites include retail stores, restaurants, cell towers, trains, and many others.